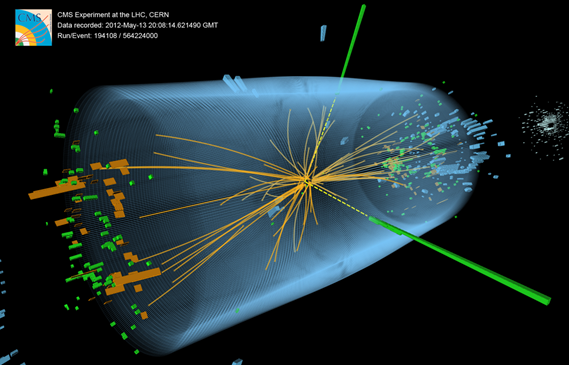

Collisions at the Large Hadron Collider (LHC) produce particles that decay, often through complex chains, into other particles. The LHC detectors record the passage of each particle as a series of electronic signals and send the data to the CERN Data Center for digital reconstruction. The reconstructed record of each collision is stored as a collision event.

Up to a billion particle collisions can occur every second inside the LHC detectors. There are so many events that a pre-filtering system is needed to select the potentially most interesting ones. Even so, the LHC experiments produce about 90 petabytes (90 million gigabytes) of data per year. For comparison, all the printed material in the world amounts to about 200 petabytes. This means that, every year, the LHC research teams must analyze a volume of information equivalent to almost half (about 45%) of all the printed material in the world.

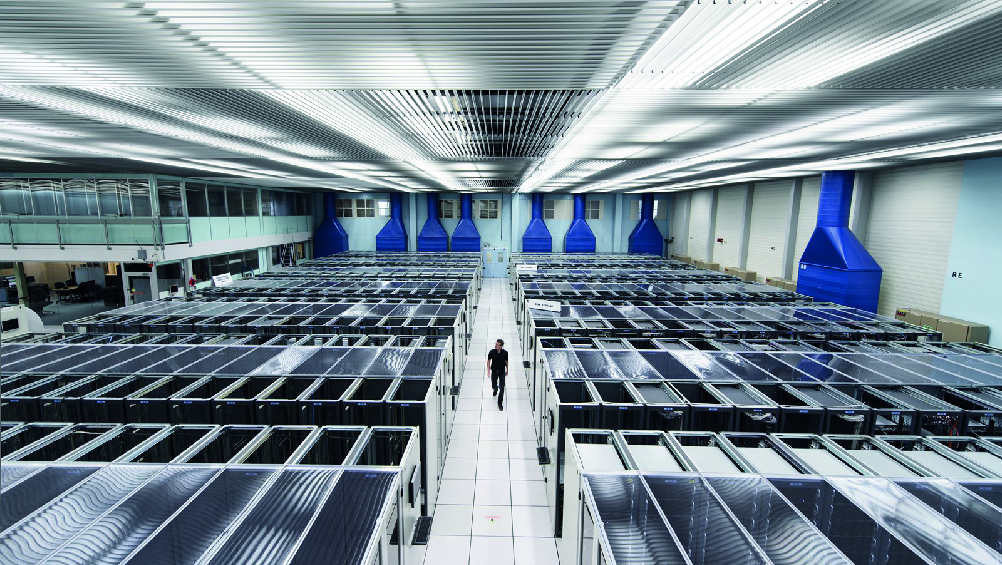

Such a large amount of data requires state-of-the-art analysis tools. One of the tasks of the Saphir Millennium Institute's research team is to develop data analysis systems that make it easier to process the enormous volume of information generated by the LHC experiments.

3D visualization of a proton collision event recorded at the Large Hadron Collider's CMS detector in 2012. Credits: Thomas McCauley, Lucas Taylor and CERN. Source: CERN.